File Store

Transactional Store

The checkpoint based transactional store consists of 2 files:

- page.db (database)
- transaction.log (journal)

It combines high-performance random access with linear (FIFO) access to message pages.

Store Structure

The transactional store is page-oriented, with a page size of 2 KB. The pages are stored in a RandomAccessFile named page.db. Every message occupies at least 1 page; larger messages are distributed over several pages. Messages are accessed via a 2-ary index consisting of the root index and one index per queue. Every queue index contains message keys together with references to the respective message pages. Each index is sorted. When a message key is inserted into a full index page, the page is split. New elements are always inserted at the end of a queue index, and elements are mostly deleted at its beginning. Every queue index therefore keeps its first and last index pages directly accessible, so locating them is very fast. All index pages are also stored in page.db.
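The page arithmetic described here can be illustrated with a minimal sketch. It assumes that a page's full 2 KB is available for payload (a real page layout most likely reserves space for a header), and the class and method names (`PageFileSketch`, `pagesNeeded`, `offsetOf`) are hypothetical and not taken from SwiftMQ.

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.util.Arrays;

/** Hypothetical sketch of fixed-size page I/O; not the SwiftMQ implementation. */
final class PageFileSketch {
    static final int PAGE_SIZE = 2048; // 2 KB pages, as described in the text

    /** Number of pages a message of the given length occupies (always at least 1). */
    static int pagesNeeded(int messageLength) {
        return Math.max(1, (messageLength + PAGE_SIZE - 1) / PAGE_SIZE);
    }

    /** Byte offset of a page within the page file. */
    static long offsetOf(int pageNo) {
        return (long) pageNo * PAGE_SIZE;
    }

    static void writePage(RandomAccessFile file, int pageNo, byte[] page) throws IOException {
        if (page.length != PAGE_SIZE) {
            throw new IllegalArgumentException("page must be " + PAGE_SIZE + " bytes");
        }
        file.seek(offsetOf(pageNo));
        file.write(page);
    }

    static byte[] readPage(RandomAccessFile file, int pageNo) throws IOException {
        byte[] page = new byte[PAGE_SIZE];
        file.seek(offsetOf(pageNo));
        file.readFully(page);
        return page;
    }

    /** Writes page 3 to a temporary file, reads it back, and reports whether the bytes match. */
    static boolean roundTrip() {
        try {
            File tmp = File.createTempFile("page", ".db");
            tmp.deleteOnExit();
            try (RandomAccessFile file = new RandomAccessFile(tmp, "rw")) {
                byte[] page = new byte[PAGE_SIZE];
                Arrays.fill(page, (byte) 7);
                writePage(file, 3, page);
                return Arrays.equals(page, readPage(file, 3));
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Because every page has the same size, a page number translates directly into a file offset, which is what makes random access to any page a single seek.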

Cache

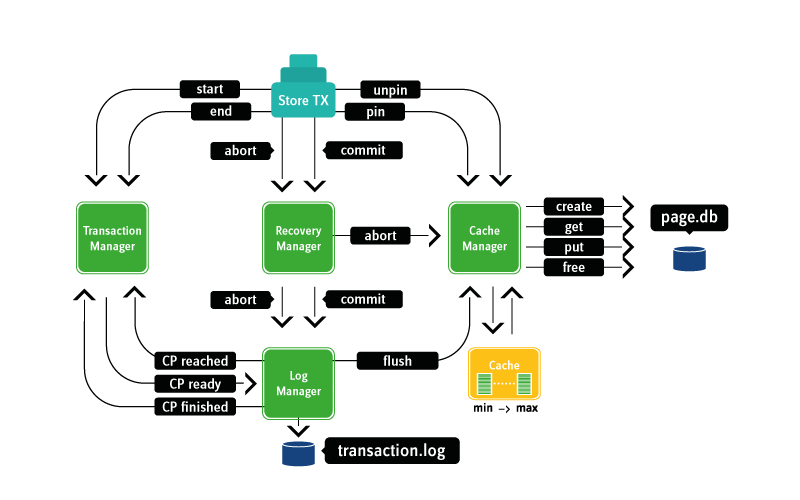

The transactional store includes a cache that keeps frequently used pages in main memory. Whether a page is relocated is decided on the basis of its hit count and last access time. The cache is controlled by a cache manager, through which all page accesses by other components are made. The cache serves as both a read and a write cache, because pages are not flushed to disk until a checkpoint. This yields very high speed for both read and write transactions.
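How hit counts and last access times are weighed against each other is not specified here. The following sketch shows one plausible policy as an assumption only: the page with the fewest hits is chosen, and ties are broken in favour of the page that was accessed longest ago. All names (`CacheVictimSketch`, `Entry`, `chooseVictim`) are hypothetical.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical victim selection for a page cache; the real policy may differ. */
final class CacheVictimSketch {

    /** Per-page bookkeeping; field names are illustrative. */
    static final class Entry {
        final int pageNo;
        final int hitCount;
        final long lastAccessMillis;

        Entry(int pageNo, int hitCount, long lastAccessMillis) {
            this.pageNo = pageNo;
            this.hitCount = hitCount;
            this.lastAccessMillis = lastAccessMillis;
        }
    }

    /**
     * Assumed policy: lowest hit count first, then oldest access time.
     * Returns an empty Optional if the cache holds no pages.
     */
    static Optional<Entry> chooseVictim(List<Entry> entries) {
        return entries.stream()
                .min(Comparator.comparingInt((Entry e) -> e.hitCount)
                        .thenComparingLong(e -> e.lastAccessMillis));
    }
}
```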

Store Transactions

Every access to the transactional store takes the form of a store transaction, controlled by a store transaction manager. Every transaction has a journal in which each change within a page is recorded at the byte level. Page access within a transaction begins by pinning the page, which ensures that the page is not flushed before the transaction is closed.
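A byte-level journal combined with page pinning might look roughly like the sketch below. It is an illustration under assumptions, not SwiftMQ code: the journal keeps a before-image for each change so that changes can be undone in reverse order, and a pin counter on the page stands in for the flush protection. The names `StoreTransactionSketch`, `Page`, and `Change` are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical store transaction with a byte-level journal and page pinning. */
final class StoreTransactionSketch {

    static final class Page {
        final byte[] data = new byte[2048];
        int pinCount; // while > 0, the page must not be flushed
    }

    /** One journal entry: which bytes changed, plus their previous content. */
    private static final class Change {
        final Page page;
        final int offset;
        final byte[] before;

        Change(Page page, int offset, byte[] before) {
            this.page = page;
            this.offset = offset;
            this.before = before;
        }
    }

    private final Deque<Change> journal = new ArrayDeque<>();
    private final Set<Page> pinned = new HashSet<>();

    /** Pins the page on first access, journals the old bytes, then applies the change. */
    void write(Page page, int offset, byte[] bytes) {
        if (pinned.add(page)) {
            page.pinCount++;
        }
        byte[] before = Arrays.copyOfRange(page.data, offset, offset + bytes.length);
        journal.push(new Change(page, offset, before));
        System.arraycopy(bytes, 0, page.data, offset, bytes.length);
    }

    /** Undoes all changes in reverse order and releases the pins. */
    void rollback() {
        while (!journal.isEmpty()) {
            Change c = journal.pop();
            System.arraycopy(c.before, 0, c.page.data, c.offset, c.before.length);
        }
        unpinAll();
    }

    /** Keeps the changes and releases the pins (log writing is omitted in this sketch). */
    void commit() {
        journal.clear();
        unpinAll();
    }

    private void unpinAll() {
        for (Page p : pinned) {
            p.pinCount--;
        }
        pinned.clear();
    }
}
```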

A transaction always terminates with a commit or a rollback. Both actions are performed by a recovery manager, which passes a log record to the log manager. In the case of a rollback, it first rolls back the changes on the pages within the cache. The log manager works asynchronously with an input queue: the recovery manager places log records into this queue and the log manager processes them asynchronously. Because of this asynchronous design, several log records can be processed in one go (so-called group commit). The log manager writes the log record of the transaction into a RandomAccessFile named transaction.log and then triggers the transaction, which unpins all of its pages. This completes the transaction. In the case of a system failure, all changes can be reconstructed from the transaction log. This procedure is called "write-ahead logging": it guarantees that the log record is stored before the changes to the actual data are written to the "stable store".
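The group commit idea can be sketched as follows. This is a simplified illustration under assumptions: an in-memory list stands in for transaction.log, a counter stands in for the disk sync, and `processPending` would be called in a loop by the log manager thread. The names `GroupCommitSketch`, `LogRecord`, and `processPending` are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

/** Hypothetical log manager that processes queued log records in batches. */
final class GroupCommitSketch {

    static final class LogRecord {
        final long txId;
        final byte[] payload;
        volatile boolean durable; // set once the record is safely in the log

        LogRecord(long txId, byte[] payload) {
            this.txId = txId;
            this.payload = payload;
        }
    }

    private final BlockingQueue<LogRecord> inputQueue = new LinkedBlockingQueue<>();
    private final List<byte[]> log = new ArrayList<>(); // stand-in for transaction.log
    int syncCount;                                       // stand-in for disk syncs

    /** Called by the recovery manager on commit or rollback. */
    void enqueue(LogRecord record) {
        inputQueue.add(record);
    }

    /** Writes everything currently queued with a single sync and returns the batch size. */
    int processPending() {
        List<LogRecord> batch = new ArrayList<>();
        inputQueue.drainTo(batch);
        if (batch.isEmpty()) {
            return 0;
        }
        for (LogRecord record : batch) {
            log.add(record.payload);
        }
        syncCount++; // one sync covers the whole batch: this is the group commit
        for (LogRecord record : batch) {
            record.durable = true; // only now may the transaction unpin its pages
        }
        return batch.size();
    }
}
```

Marking records as durable only after the sync mirrors the write-ahead rule: a transaction releases its pages only once its log record is safely stored.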

Automatic Message Page Referencing

The Store Swiftlet is able to detect if a message has already been stored and automatically references new inserts of the same message within the same transaction (composite transaction) to the already stored message. This is currently supported for non-XA transactions for durable subscribers so that only 1 message is stored for each durable subscriber.

Checkpoint

The synchronization between page.db and transaction.log is performed by the log manager when the configured maximum size of the transaction log is reached. The log manager then initiates a so-called checkpoint, which works as follows. The log manager detects that the transaction log has reached its maximum size and informs the store transaction manager, which blocks every new transaction request and waits until all current transactions have finished. The store transaction manager then puts a checkpoint-ready event into the log manager queue. The log manager receives the event and instructs the cache manager to flush the whole cache. The cache manager writes all changed pages to page.db and executes a disk sync. All data is now consistent. The log manager then resets the transaction log and forwards a checkpoint-finished event to the store transaction manager, which then allows new transactions again.
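The blocking behaviour of a checkpoint can be approximated with a read-write lock, as in the sketch below. This is a swapped-in technique chosen for brevity, not the event-based mechanism described above: transactions hold the read lock while they run, and the checkpoint takes the write lock, which both keeps new transactions out and waits for running ones. Counters stand in for the log file and the cache, and all names and the 1024-byte limit are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.ReentrantReadWriteLock;

/** Hypothetical checkpoint coordination using a read-write lock as the transaction gate. */
final class CheckpointSketch {
    static final long MAX_LOG_SIZE = 1024; // assumed configured maximum log size in bytes

    private final ReentrantReadWriteLock gate = new ReentrantReadWriteLock();
    final AtomicLong logSize = new AtomicLong();         // stand-in for transaction.log
    final AtomicInteger dirtyPages = new AtomicInteger(); // stand-in for the cache
    final AtomicInteger checkpoints = new AtomicInteger();

    /** Runs one transaction, then triggers a checkpoint if the log has grown too large. */
    void runTransaction(int changedPages, long logBytes) {
        gate.readLock().lock(); // many transactions may run concurrently
        try {
            dirtyPages.addAndGet(changedPages);
            logSize.addAndGet(logBytes);
        } finally {
            gate.readLock().unlock();
        }
        checkpointIfLogFull();
    }

    private void checkpointIfLogFull() {
        if (logSize.get() < MAX_LOG_SIZE) {
            return;
        }
        gate.writeLock().lock(); // blocks new transactions, waits for running ones
        try {
            if (logSize.get() < MAX_LOG_SIZE) {
                return; // another thread already completed the checkpoint
            }
            dirtyPages.set(0); // flush all changed pages to page.db and sync
            logSize.set(0);    // reset the transaction log
            checkpoints.incrementAndGet();
        } finally {
            gate.writeLock().unlock(); // new transactions are allowed again
        }
    }
}
```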

Recovery

A checkpoint is performed automatically when a router is shut down normally. If the router terminates for any other reason (e.g. a processor failure), the recovery manager runs a recovery phase during the startup of the Store Swiftlet. It processes the transaction log and produces a consistent state.

XA Transactions

XA transactions are distributed transactions in which several resource managers may be involved. A resource manager manages one resource, e.g. a database system or a message server. Hence, SwiftMQ is a resource. XA transactions are handled according to the two-phase commit protocol, 2PC for short. The first phase of this protocol is the prepare phase, in which the JTA transaction manager (e.g. an application server) sends a prepare request to all resource managers. A resource manager has to guarantee that the transaction's changes are stored persistently; it does so by writing a prepare log record. The transaction is not yet closed, and all locks remain in place. The second phase consists of either a commit or a rollback. The prepare phase guarantees that all participating resource managers are able to either commit or abort their transactions. If a resource manager fails between prepare and commit, the transaction is in a doubtful state; it is an "in-doubt transaction". After the resource manager restarts, either the JTA transaction manager instructs it to perform a commit or a rollback, or this has to be done manually via administrative functions. Consistency is thereby restored.
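The two phases can be illustrated from the transaction manager's point of view with a minimal sketch. The `ResourceManager` interface below is a hypothetical simplification for illustration; it is not the `javax.transaction.xa.XAResource` interface and it omits failure handling and in-doubt recovery.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, simplified two-phase commit coordinator. */
final class TwoPhaseCommitSketch {

    /** Simplified stand-in for a resource manager; not the real XA interface. */
    interface ResourceManager {
        boolean prepare(String txId); // true = changes persisted, ready to commit
        void commit(String txId);
        void rollback(String txId);
    }

    /** Phase 1: prepare all participants. Phase 2: commit if all agreed, else roll back. */
    static boolean complete(String txId, List<ResourceManager> participants) {
        boolean allPrepared = true;
        for (ResourceManager rm : participants) {
            if (!rm.prepare(txId)) {
                allPrepared = false;
            }
        }
        for (ResourceManager rm : participants) {
            if (allPrepared) {
                rm.commit(txId);
            } else {
                rm.rollback(txId);
            }
        }
        return allPrepared;
    }

    /** Test double that records the calls it receives and votes as configured. */
    static final class RecordingResource implements ResourceManager {
        final List<String> calls = new ArrayList<>();
        private final boolean vote;

        RecordingResource(boolean vote) {
            this.vote = vote;
        }

        @Override
        public boolean prepare(String txId) {
            calls.add("prepare");
            return vote;
        }

        @Override
        public void commit(String txId) {
            calls.add("commit");
        }

        @Override
        public void rollback(String txId) {
            calls.add("rollback");
        }
    }
}
```

A crash of a participant between its `prepare` and the second phase is exactly the in-doubt case described above: the participant has promised to be able to commit but does not yet know the outcome.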

Illustration of the Transactional Store

Swap Store

If a queue cache is full, the swap store is used to swap out messages. In this case, a RandomAccessFile is created per queue, into which messages are swapped out. For every stored message, the file position is returned and stored within the queue message index, so access to swapped-out messages is as fast as possible. Swap files are deleted automatically when all their entries are marked as deleted, as well as during a router shutdown. A roll-over size may be specified for swap files to ensure that they do not grow too large. When this size is reached, a new swap file is created and the previous one persists until it is empty.
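A swap file with roll-over might be sketched as follows. The record layout (a 4-byte length followed by the message bytes), the file naming, and the names `SwapStoreSketch`, `Location`, `swapOut`, and `swapIn` are assumptions made for illustration; deletion of empty swap files is omitted.

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical per-queue swap store with roll-over; not the SwiftMQ implementation. */
final class SwapStoreSketch implements AutoCloseable {

    /** What the queue index would keep per swapped-out message. */
    static final class Location {
        final int fileNo;
        final long position;

        Location(int fileNo, long position) {
            this.fileNo = fileNo;
            this.position = position;
        }
    }

    private final File dir;
    private final long rollOverSize;
    private final List<RandomAccessFile> files = new ArrayList<>();

    SwapStoreSketch(File dir, long rollOverSize) {
        this.dir = dir;
        this.rollOverSize = rollOverSize;
    }

    /** Appends the message and returns where it was stored. */
    Location swapOut(byte[] message) throws IOException {
        if (files.isEmpty() || current().length() >= rollOverSize) {
            File f = new File(dir, "queue-" + files.size() + ".swap"); // roll over
            f.deleteOnExit();
            files.add(new RandomAccessFile(f, "rw"));
        }
        RandomAccessFile file = current();
        long position = file.length();
        file.seek(position);
        file.writeInt(message.length); // assumed layout: length prefix, then payload
        file.write(message);
        return new Location(files.size() - 1, position);
    }

    /** Reads a message back with a single seek to its stored position. */
    byte[] swapIn(Location location) throws IOException {
        RandomAccessFile file = files.get(location.fileNo);
        file.seek(location.position);
        byte[] message = new byte[file.readInt()];
        file.readFully(message);
        return message;
    }

    private RandomAccessFile current() {
        return files.get(files.size() - 1);
    }

    @Override
    public void close() throws IOException {
        for (RandomAccessFile file : files) {
            file.close();
        }
    }

    /** Swaps two messages out and in again, using a roll-over size of 16 bytes. */
    static String demo() {
        try {
            File dir = Files.createTempDirectory("swap").toFile();
            dir.deleteOnExit();
            try (SwapStoreSketch store = new SwapStoreSketch(dir, 16)) {
                Location a = store.swapOut("first message".getBytes(StandardCharsets.UTF_8));
                Location b = store.swapOut("second".getBytes(StandardCharsets.UTF_8));
                return new String(store.swapIn(a), StandardCharsets.UTF_8) + "|"
                        + new String(store.swapIn(b), StandardCharsets.UTF_8) + "|"
                        + b.fileNo + "@" + b.position;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In `demo()`, the first record occupies 17 bytes, which exceeds the 16-byte roll-over size, so the second message lands at position 0 of a new file.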

Durable Subscriber Store

Durable subscriber definitions are stored in single files. The file name contains the client-id and the durable subscriber name.